Database System Concepts

7th Edition

ISBN: 9780078022159

Author: Abraham Silberschatz, Henry F. Korth, S. Sudarshan

Publisher: McGraw-Hill Education


Question

Transcribed Image Text:

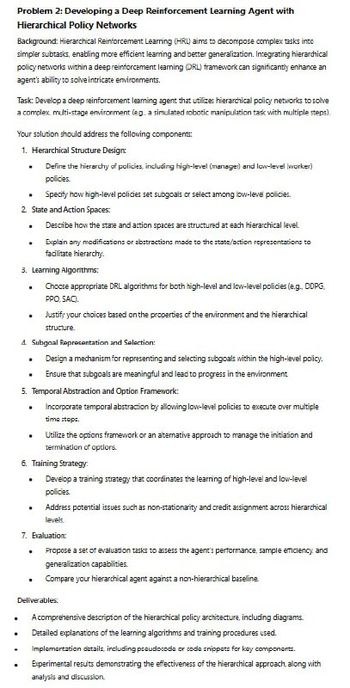

Problem 2: Developing a Deep Reinforcement Learning Agent with Hierarchical Policy Networks

Background: Hierarchical Reinforcement Learning (HRL) aims to decompose complex tasks into simpler subtasks, enabling more efficient learning and better generalization. Integrating hierarchical policy networks within a deep reinforcement learning (DRL) framework can significantly enhance an agent's ability to solve intricate environments.

Task: Develop a deep reinforcement learning agent that uses hierarchical policy networks to solve a complex multi-stage environment (e.g., a simulated robotic manipulation task with multiple steps). Your solution should address the following components:

1. Hierarchical Structure Design:

   - Define the hierarchy of policies, including high-level (manager) and low-level (worker) policies.
   - Specify how high-level policies set subgoals or select among low-level policies.

2. State and Action Spaces:

   - Describe how the state and action spaces are structured at each hierarchical level.
   - Explain any modifications or abstractions made to the state/action representations to facilitate hierarchy.

3. Learning Algorithms:

   - Choose appropriate DRL algorithms for both high-level and low-level policies (e.g., DDPG, PPO, SAC).
   - Justify your choices based on the properties of the environment and the hierarchical structure.

4. Subgoal Representation and Selection:

   - Design a mechanism for representing and selecting subgoals within the high-level policy.
   - Ensure that subgoals are meaningful and lead to progress in the environment.

5. Temporal Abstraction and Options Framework:

   - Incorporate temporal abstraction by allowing low-level policies to execute over multiple time steps.
   - Use the options framework or an alternative approach to manage the initiation and termination of options.

6. Training Strategy:

   - Develop a training strategy that coordinates the learning of high-level and low-level policies.
   - Address potential issues such as non-stationarity and credit assignment across hierarchical levels.

7. Evaluation:

   - Propose a set of evaluation tasks to assess the agent's performance, sample efficiency, and generalization capabilities.
   - Compare your hierarchical agent against a non-hierarchical baseline.
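As a starting point for components 1, 4, and 5, the manager/worker interaction might be sketched as below. This is a minimal illustration, not a reference solution. It assumes a toy one-dimensional environment whose state is a position and whose extrinsic reward is a cost of -1 per step. The names `manager_policy`, `worker_policy`, `intrinsic_reward`, and `run_episode` are hypothetical, and both policies are hand-written rules standing in for learned networks.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class WorkerTransition:
    state: float
    subgoal: float
    action: float
    intrinsic_reward: float


def clamp(x: float, limit: float) -> float:
    return max(-limit, min(limit, x))


def manager_policy(state: float, final_goal: float, max_offset: float = 2.0) -> float:
    """Hypothetical high-level policy: propose a subgoal near the current state.

    A learned manager would output this subgoal from a network instead.
    """
    return state + clamp(final_goal - state, max_offset)


def worker_policy(state: float, subgoal: float, max_step: float = 0.5) -> float:
    """Hypothetical low-level policy: take a bounded step toward the subgoal."""
    return clamp(subgoal - state, max_step)


def intrinsic_reward(next_state: float, subgoal: float) -> float:
    """Worker reward: negative distance to the subgoal set by the manager."""
    return -abs(subgoal - next_state)


def run_episode(start: float, final_goal: float, horizon: int = 40,
                k: int = 4, tol: float = 1e-6):
    """Run one episode; the manager acts only when the worker's option ends."""
    state, t = start, 0
    manager_log: List[Tuple[float, float, float, float]] = []
    worker_log: List[WorkerTransition] = []
    while t < horizon and abs(final_goal - state) > tol:
        subgoal = manager_policy(state, final_goal)
        decision_state, extrinsic_sum, steps = state, 0.0, 0
        # Temporal abstraction: the worker keeps control for up to k steps
        # and terminates early once the subgoal is reached.
        while steps < k and t < horizon and abs(subgoal - state) > tol:
            action = worker_policy(state, subgoal)
            next_state = state + action
            worker_log.append(WorkerTransition(
                state, subgoal, action, intrinsic_reward(next_state, subgoal)))
            extrinsic_sum += -1.0  # toy extrinsic reward: per-step cost
            state = next_state
            steps += 1
            t += 1
        # The manager's transition spans the whole option and is credited
        # with the extrinsic reward accumulated while the worker ran.
        manager_log.append((decision_state, subgoal, extrinsic_sum, state))
    return state, manager_log, worker_log
```

The two logs keep the levels separate: `worker_log` holds per-step transitions rewarded by subgoal distance, while `manager_log` holds one transition per subgoal with the summed extrinsic reward, which is the data each level's learning algorithm would train on.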

Deliverables:

- A comprehensive description of the hierarchical policy architecture, including diagrams.
- Detailed explanations of the learning algorithms and training procedures used.
- Implementation details, including pseudocode or code snippets for key components.
- Experimental results demonstrating the effectiveness of the hierarchical approach, along with analysis and discussion.
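For the experimental comparison, the quantities named in the evaluation component (performance, sample efficiency, generalization) could be computed with helpers along these lines. This is a sketch under assumptions: the function names are hypothetical, success is assumed to be a per-episode boolean, and a learning curve is assumed to be a list of `(environment_steps, success_rate)` pairs sorted by steps. The same functions would be applied to both the hierarchical agent and the non-hierarchical baseline.

```python
from typing import Optional, Sequence, Tuple


def success_rate(outcomes: Sequence[bool]) -> float:
    """Fraction of evaluation episodes that solved the task."""
    if not outcomes:
        raise ValueError("need at least one evaluation episode")
    return sum(outcomes) / len(outcomes)


def steps_to_threshold(curve: Sequence[Tuple[int, float]],
                       threshold: float) -> Optional[int]:
    """Sample efficiency: first environment-step count at which the
    success rate reaches the threshold, or None if it never does."""
    for env_steps, rate in curve:
        if rate >= threshold:
            return env_steps
    return None


def generalization_gap(train_outcomes: Sequence[bool],
                       heldout_outcomes: Sequence[bool]) -> float:
    """Success on training tasks minus success on held-out task variants."""
    return success_rate(train_outcomes) - success_rate(heldout_outcomes)
```

Reporting `steps_to_threshold` for both agents at the same threshold gives a direct sample-efficiency comparison, and a smaller `generalization_gap` indicates better transfer to unseen task variants.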


