Question

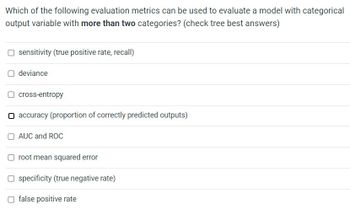

Transcribed image text: Which of the following evaluation metrics can be used to evaluate a model with a categorical output variable that has more than two categories? (Check the three best answers.)

- sensitivity (true positive rate, recall)
- deviance
- cross-entropy
- accuracy (proportion of correctly predicted outputs)
- AUC and ROC
- root mean squared error
- specificity (true negative rate)
- false positive rate
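Accuracy, cross-entropy, and deviance are defined directly for any number of categories. Accuracy only compares the predicted class with the true class, and cross-entropy and deviance only need the probability the model assigned to the true class. The sketch below is a minimal, dependency-free illustration under stated assumptions: the three-class labels, the probabilities, and the helper names `accuracy`, `cross_entropy`, and `deviance` are hypothetical, and deviance is taken as -2 × log-likelihood for ungrouped outcomes, where the saturated model's log-likelihood is 0.

```python
import math


def accuracy(y_true, y_pred):
    """Proportion of observations whose predicted class equals the true class."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


def cross_entropy(y_true, probs):
    """Mean negative log-probability assigned to the true class.

    probs[i] maps every class label to its predicted probability for row i.
    """
    total = 0.0
    for t, p in zip(y_true, probs):
        total += -math.log(p[t])
    return total / len(y_true)


def deviance(y_true, probs):
    """-2 * log-likelihood, assuming ungrouped data (saturated log-likelihood = 0).

    This equals 2 * N * mean cross-entropy.
    """
    return 2 * len(y_true) * cross_entropy(y_true, probs)


# Hypothetical three-class example with labels "a", "b", "c"
y_true = ["a", "b", "c", "a"]
probs = [
    {"a": 0.7, "b": 0.2, "c": 0.1},
    {"a": 0.1, "b": 0.8, "c": 0.1},
    {"a": 0.2, "b": 0.5, "c": 0.3},
    {"a": 0.6, "b": 0.3, "c": 0.1},
]
# Predicted class = label with the highest predicted probability
y_pred = [max(p, key=p.get) for p in probs]

print(accuracy(y_true, y_pred))               # 0.75 (third row is misclassified)
print(round(cross_entropy(y_true, probs), 4))  # 0.5737
print(round(deviance(y_true, probs), 4))       # 4.5892
```

By contrast, sensitivity, specificity, false positive rate, and ROC/AUC are defined relative to one designated positive class, so with more than two categories they need a one-vs-rest extension, and root mean squared error is intended for numeric outputs.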

Similar questions

- In a fraud detection scenario where the problem is to predict whether a transaction is fraudulent, a model correctly identifies fraudulent transactions 94% of the time. Is this a good model? (a) Yes, this model identifies fraud with a very high percentage. (b) Not sure until we know the false positive rate, ROC, or other metrics that capture the false positive rate as well. (c) No, this is the incorrect evaluation metric to use; the ASE of the test set is more appropriate for this type of problem.

- Which of the following evaluation metrics can be used to evaluate a model with a categorical output variable with exactly two categories? (Check all that apply.) Options: specificity (true negative rate); accuracy (proportion of correctly predicted outputs); deviance; AUC and ROC; false positive rate; root mean squared error; sensitivity (true positive rate, recall); cross-entropy.

- The lower the misclassification rate, the better the predictive performance of a logistic regression model. True or false?

- Which statement about k-fold cross-validation is FALSE? (a) It is typically used to tune and select the best hyperparameters for the model. (b) On each step, one fold is used as the training data and the remaining k − 1 folds are used as testing data. (c) It partitions the data into k non-overlapping folds. (d) The last step of k-fold cross-validation is to compute the average performance estimate. (e) All observations are used for both training and validation.

- What does the CLI option do on the MODEL statement of an MLR analysis in PROC GLM? (a) Produce prediction intervals for the slope parameters. (b) Produce confidence intervals for the slope parameters. (c) Produce confidence intervals for the mean response at all predictor combinations in the dataset. (d) Produce prediction intervals for a future response at all predictor combinations in the dataset.

- Fit a decision tree model using the training dataset (`x_train` and `y_train`). Create a variable named `y_pred` and save the predictions made on `x_test` to it. Create a variable called `dt_accuracy`, compute the accuracy of the decision tree model from `y_pred` and `y_test`, and assign it to `dt_accuracy`. Show the steps in Python along with the final answer.

- Consider the following data from a regression model, where YACTUAL is the actual observation and YPREDICTED is the model's predicted value. Using the data, compute the mean absolute error, the mean relative error, Prediction (X) when X ≥ 30%, and the value of R². YACTUAL: 12.6, 9.8, 9.6, 9.9, 11.5, 11.2, 12.3, 9.5, 9.7, 12.4. YPREDICTED: 12.8, 9.1, 9.7, 9.9, 11.3, 12.1, 12.0, 9.8, 9.6, 12.2.

- Which of the following models should be used to show that a single outcome with quantitative input values has no randomness? (a) The threshold model (b) The deterministic model (c) The statistical model (d) The stochastic model

- You have trained a logistic regression classifier and plan to make predictions according to: predict $y = 1$ if $h_\theta(x) \ge \text{threshold}$; predict $y = 0$ if $h_\theta(x) < \text{threshold}$. For different threshold values, you get different values of precision ($P$) and recall ($R$). Which of the following is a reasonable way to pick the threshold value? (a) Measure $P$ and $R$ on the test set and choose the threshold that maximizes $\frac{P+R}{2}$. (b) Measure $P$ and $R$ on the cross-validation set and choose the threshold that maximizes $\frac{P+R}{2}$. (c) Measure $P$ and $R$ on the cross-validation set and choose the threshold that maximizes $\frac{2PR}{P+R}$. (d) Measure $P$ and $R$ on the test set and choose the threshold that maximizes $\frac{2PR}{P+R}$.

- Gradient descent algorithm
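For contrast with the two-category question above, sensitivity, specificity, and false positive rate all come from a 2×2 confusion matrix in which one class is designated positive. The sketch below is a hypothetical illustration (the helper `binary_rates` and the eight fraud labels are made up). It also shows why a figure like "correctly identifies fraudulent transactions 94% of the time" reads as a sensitivity, which by itself says nothing about how many legitimate transactions get flagged.

```python
def binary_rates(y_true, y_pred, positive):
    """Sensitivity, specificity, and false positive rate for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    sensitivity = tp / (tp + fn)  # true positive rate, recall
    specificity = tn / (tn + fp)  # true negative rate
    fpr = fp / (fp + tn)          # false positive rate = 1 - specificity
    return sensitivity, specificity, fpr


# Hypothetical fraud labels: 1 = fraud, 0 = legitimate
y_true = [1, 1, 1, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 1, 0, 0, 0, 0]

sens, spec, fpr = binary_rates(y_true, y_pred, positive=1)
print(sens, spec, fpr)  # sensitivity 2/3, specificity 0.8, false positive rate 0.2
```

Because every quantity depends on which label is passed as `positive`, a model with more than two categories would need one such calculation per class (one-vs-rest) rather than a single number.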
