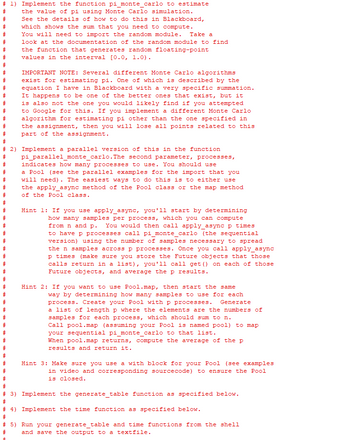

Please follow the instructions in the provided screenshots carefully to implement the code below in Python 3.10 or later.

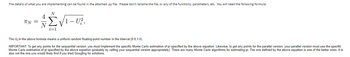

    def pi_monte_carlo(n):
        """Computes and returns an estimation of pi
        using Monte Carlo simulation.

        Keyword arguments:
        n - The number of samples.
        """
        pass
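One possible way to fill in the sequential stub is sketched below. It assumes the usual dartboard formulation, which the stub itself does not spell out: draw `n` uniform points in the unit square, count those that land inside the quarter circle of radius 1, and scale the fraction by 4. The use of the standard `random` module is also an assumption.

```python
import random


def pi_monte_carlo(n):
    """Estimate pi from n uniform random points in the unit square."""
    inside = 0
    for _ in range(n):
        x = random.random()
        y = random.random()
        # Count the point if it falls inside the quarter circle of radius 1.
        if x * x + y * y <= 1.0:
            inside += 1
    # (quarter-circle area) / (square area) = pi / 4, so scale the ratio by 4.
    return 4 * inside / n
```

The estimate is random, so repeated calls with the same `n` return slightly different values; larger `n` tends to give values closer to pi.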

    def pi_parallel_monte_carlo(n, p=4):
        """Computes and returns an estimation of pi
        using a parallel Monte Carlo simulation.

        Keyword arguments:
        n - The total number of samples.
        p - The number of processes to use.
        """
        # You can distribute the work across p
        # processes by having each process
        # call the sequential version, where
        # those calls divide the n samples across
        # the p calls.
        # Once those calls return, simply average
        # the p partial results and return that average.
        pass
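The comments in the stub can be turned into code roughly as follows. This is a minimal sketch that assumes `multiprocessing.Pool` is the intended "Pool" and that `n` is divisible by `p`, as the assignment's choice of `n` values guarantees. The sequential function is repeated in compact form only so the block runs on its own.

```python
import random
from multiprocessing import Pool


def pi_monte_carlo(n):
    """Sequential estimate, repeated here so the sketch is self-contained."""
    inside = sum(1 for _ in range(n)
                 if random.random() ** 2 + random.random() ** 2 <= 1.0)
    return 4 * inside / n


def pi_parallel_monte_carlo(n, p=4):
    """Average p sequential estimates, each computed on n // p samples."""
    # Assumes n is divisible by p, so every process gets the same share.
    with Pool(p) as pool:
        partial_results = pool.map(pi_monte_carlo, [n // p] * p)
    return sum(partial_results) / p


if __name__ == "__main__":
    # The guard matters on platforms that start worker processes with "spawn".
    print(pi_parallel_monte_carlo(120000, p=4))
```

Because every process handles the same number of samples, the plain average of the `p` partial results equals the estimate over all `n` samples.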

    def generate_table():
        """This function should generate and print a table
        of results to demonstrate that both versions
        compute increasingly accurate estimations of pi
        as n is increased. It should use the following
        values of n = {12, 24, 48, ..., 12582912}. That is,
        the first value of n is 12, and then each subsequent
        n is 2 times the previous. The reason for starting at 12
        is so that n is always divisible by 1, 2, 3, and 4.
        The first column should be n, the second column should
        be the result of calling pi_monte_carlo(n), and you
        should then have 4 more columns for the parallel
        version, but with 1, 2, 3, and 4 processes in the Pool."""
        pass
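The table described in the docstring might be produced along these lines. The helper names `sample_sizes` and `format_row`, the column widths, and the extra parameters of `generate_table` are all assumptions made to keep the sketch self-contained; the assignment's `generate_table()` takes no arguments and would call the two estimator functions directly.

```python
def sample_sizes(first=12, last=12582912):
    """Return [12, 24, 48, ..., 12582912], doubling at each step."""
    sizes = []
    n = first
    while n <= last:
        sizes.append(n)
        n *= 2
    return sizes


def format_row(n, values):
    """Format one table row: n followed by one value per column."""
    return f"{n:>10}" + "".join(f"{v:>12.6f}" for v in values)


def generate_table(sequential, parallel, sizes=None):
    """Print n, sequential(n), and parallel(n, p) for p = 1, 2, 3, 4."""
    labels = ["sequential"] + [f"p={p}" for p in range(1, 5)]
    print(f"{'n':>10}" + "".join(f"{label:>12}" for label in labels))
    for n in (sizes if sizes is not None else sample_sizes()):
        values = [sequential(n)] + [parallel(n, p) for p in range(1, 5)]
        print(format_row(n, values))
```

Passing a short `sizes` list is a quick way to check the layout before running the full range, since the largest values of `n` take a while in pure Python.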

    def time():
        """This function should generate a table of runtimes
        using timeit. Use the same columns and values of
        n as in the generate_table() function. When you use timeit
        for this, pass number=1 (because the high n values will be slow)."""
        pass
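A single runtime measurement with `number=1` could look like the sketch below. The helpers `time_call` and `timing_row` are hypothetical names, and the estimator functions are passed in as arguments only so the block runs by itself; the assignment's `time()` would loop over the same values of `n` as `generate_table()` and print one such row per `n`.

```python
import timeit


def time_call(func, *args):
    """Return the seconds taken by one call of func(*args)."""
    # number=1 runs the call exactly once, as the assignment asks.
    return timeit.timeit(lambda: func(*args), number=1)


def timing_row(n, sequential, parallel):
    """Return runtimes for sequential(n) and parallel(n, p), p = 1..4."""
    return [time_call(sequential, n)] + [
        time_call(parallel, n, p) for p in range(1, 5)
    ]
```

Since the assignment names its function `time()`, a plain `import time` in the same file would be shadowed by that definition; using `timeit` avoids the clash.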

How do I get this code to output the data into a text file?
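One way to send printed output to a text file, assuming the code in question writes its results with `print`, is to redirect standard output while the function runs. The helper name `write_output_to_file` and the file name in the usage note are hypothetical.

```python
from contextlib import redirect_stdout


def write_output_to_file(func, path):
    """Call func() with everything it prints redirected to the file at path."""
    with open(path, "w", encoding="utf-8") as f:
        with redirect_stdout(f):
            func()
```

For example, `write_output_to_file(generate_table, "results.txt")` would store the printed table in `results.txt`. The redirection only covers output printed by the calling process, not by worker processes.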
