A First Course in Probability (10th Edition)

ISBN: 9780134753119

Author: Sheldon Ross

Publisher: PEARSON

Question

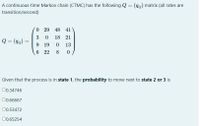

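Transcribed Image Text: A continuous-time Markov chain (CTMC) has the following $Q = (q_{ij})$ matrix (all rates are in transitions/second):

$$
Q = (q_{ij}) =
\begin{pmatrix}
0 & 29 & 48 & 41 \\
3 & \cdot & 18 & 21 \\
9 & 19 & \cdot & 13 \\
6 & 22 & 8 & \cdot
\end{pmatrix}
$$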

Given that the process is in state 1, the probability that it moves next to state 2 or 3 is

- 0.34746
- 0.66667
- 0.53472
- 0.65254
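A minimal sketch of how the requested quantity could be computed, assuming the first row of Q lists the rates out of state 1 as 29, 48 and 41 (to states 2, 3 and 4 respectively). In a CTMC the next state is chosen in proportion to the outgoing rates, so the probability of jumping from state $i$ to state $j$ is $q_{ij} / \sum_{k \neq i} q_{ik}$. The helper name `jump_probability` and the dictionary layout are illustrative choices, not part of the original question.

```python
def jump_probability(rates, targets):
    """Probability that the next jump lands in one of `targets`.

    rates:   dict mapping destination state -> transition rate out of
             the current state (diagonal entry excluded).
    targets: iterable of destination states of interest.
    """
    total = sum(rates.values())
    if total <= 0:
        raise ValueError("the current state has no outgoing transitions")
    return sum(rates[s] for s in targets) / total


# Assumed rates out of state 1, read from the first row of Q.
rates_from_state_1 = {2: 29.0, 3: 48.0, 4: 41.0}

p = jump_probability(rates_from_state_1, targets=[2, 3])
print(round(p, 5))  # prints 0.65254, i.e. (29 + 48) / (29 + 48 + 41) = 77/118
```

Under these assumptions the result agrees with the last of the listed answer choices.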

Similar questions

- 1. A machine can be in one of four states: 'running smoothly' (state 1), 'running but needs adjustment' (state 2), 'temporarily broken' (state 3), and 'destroyed' (state 4). Each morning the state of the machine is recorded. Suppose that the state of the machine tomorrow morning depends only on the state of the machine this morning subject to the following rules.
  - If the machine is running smoothly, there is 1% chance that by the next morning it will have exploded (this will destroy the machine), there is also a 9% chance that some part of the machine will break leading to it being temporarily broken. If neither of these things happen then the next morning there is an equal probability of it running smoothly or running but needing adjustment.
  - If the machine is temporarily broken in the morning then an engineer will attempt to repair the machine that day, there is an equal chance that they succeed and the machine is running smoothly by the next day or they fail and cause the machine…
- A Markov Chain has the transition matrix 1 P = and currently has state vector ½ ½ . What is the probability it will be in state 1 after two more stages (observations) of the process? (A) 112 (B) % (C) %36 (D) 12 (E) % (F) 672 (G) 3/6 (H) "/2

- Give me right solution according to the question
- (Transition Probabilities) must be about Markov Chain. Any year on a planet in the Sirius star system is either economic growth or recession (constriction). If there is growth for one year, there is 70% probability of growth in the next year, 10% probability recession is happening. If there is a recession one year, there is a 30% probability of growth and a 60% probability of recession the next year. (a) If recession is known in 2263, find the probability of growth in 2265. (b) What is the probability of a recession on the planet in the year Captain Kirk and his crew first visited the planet? Explain it to someone who does not know anything about the subject.
- Problem: Construct an example of a Markov chain that has a finite number of states and is not recurrent. Is your example that of a transient chain?

- A factory worker will quit with probability 1/2 during her first month, with probability 1/4 during her second month and with probability 1/8 after that. Whenever someone quits, their replacement will start at the beginning of the next month. Model the status of each position as a Markov chain with 3 states. Identify the states and transition matrix. Write down the system of equations determining the long-run proportions. Suppose there are 900 workers in the factory. Find the average number of the workers who have been there for more than 2 months.
- Suppose that a Markov chain with 3 states and with transition matrix P is in state 3 on the first observation. Which of the following expressions represents the probability that it will be in state 1 on the third observation? (A) the (3, 1) entry of $P^3$ (B) the (1, 3) entry of $P^3$ (C) the (3, 1) entry of $P^4$ (D) the (1, 3) entry of $P^2$ (E) the (3, 1) entry of $P$ (F) the (1, 3) entry of $P^4$ (G) the (3, 1) entry of $P^2$ (H) the (1, 3) entry of $P$
- Consider the mouse in the maze shown to the right that includes "one-way" doors. Show that q (also given to the right), is a steady state vector for the associated Markov chain, and interpret this result in terms of the mouse's travels through the maze. To show that q is a steady-state vector for the Markov chain, first find the transition matrix. The transition matrix is P= 0. (Type integers or simplified fractions for any values in the matrix.) 4 2 3 5 6 q= 0

- Consider a Markov chain with two possible states, $S = \{0, 1\}$. In particular, suppose that the transition matrix is given by $P = \begin{pmatrix} 1-\alpha & \alpha \\ \beta & 1-\beta \end{pmatrix}$. Show that $P^n = \frac{1}{\alpha+\beta}\begin{pmatrix} \beta & \alpha \\ \beta & \alpha \end{pmatrix} + \frac{(1-\alpha-\beta)^n}{\alpha+\beta}\begin{pmatrix} \alpha & -\alpha \\ -\beta & \beta \end{pmatrix}$.
- Suppose the transition matrix for a Markov Chain is T = . Find a non-zero stable population, i.e. an x ≠ 0 such that Tx = x.
