Elementary Linear Algebra (MindTap Course List)

8th Edition

ISBN: 9781305658004

Author: Ron Larson

Publisher: Cengage Learning

Question

1. Is this null hypothesis always testable? Why or why not?

2. Consider the case that this null hypothesis is testable. Construct a statistical test and its rejection region for $H_0$.

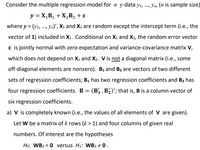

Transcribed Image Text: Consider the multiple regression model for $n$ y-data $y_1, \ldots, y_n$ ($n$ is the sample size):

$$y = X_1\beta_1 + X_2\beta_2 + \varepsilon$$

where $y = (y_1, \ldots, y_n)'$, and $X_1$ and $X_2$ are random except for the intercept term (i.e., the vector of 1s) included in $X_1$. Conditional on $X_1$ and $X_2$, the random error vector $\varepsilon$ is jointly normal with zero expectation and variance-covariance matrix $V$, which does not depend on $X_1$ and $X_2$. $V$ is not a diagonal matrix (i.e., some off-diagonal elements are nonzero). $\beta_1$ and $\beta_2$ are vectors of two different sets of regression coefficients; $\beta_1$ has two regression coefficients and $\beta_2$ has four regression coefficients. $\beta = (\beta_1', \beta_2')'$; that is, $\beta$ is a column vector of six regression coefficients.

a) $V$ is completely known (i.e., the values of all elements of $V$ are given). Let $W$ be a matrix of $k$ rows ($k > 1$) and four columns of given real numbers. Of interest are the hypotheses

$$H_0: W\beta_2 = 0 \quad \text{versus} \quad H_1: W\beta_2 \neq 0.$$
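
One way to approach both questions is sketched below. It rests on assumptions the problem statement does not spell out: $V$ is positive definite, and the analysis is conditional on $X = [X_1, X_2]$. The symbols $L$, $C$, $r$, and $Q$ are notation introduced for this sketch only. The sketch uses the generalized least squares (GLS) estimator, a natural choice when $V$ is known and non-diagonal; it is not necessarily the solution given on the original page.

```latex
\begin{align*}
% Stack the design matrices and rewrite the hypothesis in terms of the full beta.
X &= [\,X_1 \;\; X_2\,] \in \mathbb{R}^{n \times 6}, \qquad
L = [\,0_{k \times 2} \;\; W\,], \qquad L\beta = W\beta_2, \\
% GLS estimator (assumes X has full column rank 6 and V is positive definite).
\hat\beta &= C X' V^{-1} y, \qquad C = (X' V^{-1} X)^{-1}, \\
% Conditional sampling distribution of the estimated contrasts.
L\hat\beta \mid X &\sim N\!\left(W\beta_2,\; L C L'\right), \\
% Test statistic; r = rank(W) <= min(k, 4). A generalized inverse handles k > r.
Q &= (L\hat\beta)'\,(L C L')^{-}\,(L\hat\beta) \;\sim\; \chi^2_{r} \quad \text{under } H_0, \\
% Level-alpha rejection region.
\text{reject } H_0 &\iff Q > \chi^2_{r,\,1-\alpha}.
\end{align*}
```

Under these assumptions the null distribution of $Q$ does not depend on $X$, so the same rejection region applies unconditionally. On testability: $H_0$ is testable only when every row of $L\beta$ is estimable, that is, when each row of $L$ lies in the row space of $X$. That always holds if $X$ has full column rank, but it can fail when $X$ is rank-deficient (for example, $n < 6$ or collinear columns), which is why the hypothesis is not always testable. In the rank-deficient but estimable case, $C$ would be replaced by a generalized inverse $(X' V^{-1} X)^{-}$.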

Similar questions

- The least-squares regression line relating two statistical variables is given as $\hat{y} = 24 + 5x$. Compute the residual if the actual (observed) value for y is 38 when x is 2.

- Suppose that Y is normal and we have three explanatory unknowns which are also normal, and we have an independent random sample of 11 members of the population, where for each member, the value of Y as well as the values of the three explanatory unknowns were observed. The data is entered into a computer using linear regression software and the output summary tells us that R-square is 0.72, the linear model coefficient of the first explanatory unknown is 7 with standard error estimate 2.5, the coefficient for the second explanatory unknown is 11 with standard error 2, and the coefficient for the third explanatory unknown is 15 with standard error 4. The regression intercept is reported as 28. The sum of squares in regression (SSR) is reported as 72000 and the sum of squared errors (SSE) is 28000. From this information, what is MSE/MST? (a) .4000 (b) .3000 (c) .5000 (d) .2000 (e) NONE OF THE OTHERS

- a. Show that the regression $R^2$ in the regression of Y on X is the squared value of the sample correlation between X and Y. That is, show that $R^2 = r_{XY}^2$. b. Show that the $R^2$ from the regression of Y on X is the same as the $R^2$ from the regression of X on Y. c. Show that $\hat{\beta}_1 = r_{XY}(s_Y/s_X)$, where $r_{XY}$ is the sample correlation between X and Y, and $s_X$ and $s_Y$ are the sample standard deviations of X and Y.

- Suppose that Y is normal and we have three explanatory unknowns which are also normal, and we have an independent random sample of 21 members of the population, where for each member, the value of Y as well as the values of the three explanatory unknowns were observed. The data is entered into a computer using linear regression software and the output summary tells us that R-square is 0.9, the linear model coefficient of the first explanatory unknown is 7 with standard error estimate 2.5, the coefficient for the second explanatory unknown is 11 with standard error 2, and the coefficient for the third explanatory unknown is 15 with standard error 4. The regression intercept is reported as 28. The sum of squares in regression (SSR) is reported as 90000 and the sum of squared errors (SSE) is 10000. From this information, what is the number of degrees of freedom for the t-distribution used to compute critical values for hypothesis tests and confidence intervals for the individual model…

- Suppose that Y is normal and we have three explanatory unknowns which are also normal, and we have an independent random sample of 21 members of the population, where for each member, the value of Y as well as the values of the three explanatory unknowns were observed. The data is entered into a computer using linear regression software and the output summary tells us that R-square is 0.8, the linear model coefficient of the first explanatory unknown is 7 with standard error estimate 2.5, the coefficient for the second explanatory unknown is 11 with standard error 2, and the coefficient for the third explanatory unknown is 15 with standard error 4. The regression intercept is reported as 28. The sum of squares in regression (SSR) is reported as 80000 and the sum of squared errors (SSE) is 20000. From this information, what is the value of the hypothesis test statistic for evidence that the true value of the coefficient of the second explanatory unknown exceeds 5? (a) 4 (b) 3…

- Suppose that Y is normal and we have three explanatory unknowns which are also normal, and we have an independent random sample of 16 members of the population, where for each member, the value of Y as well as the values of the three explanatory unknowns were observed. The data is entered into a computer using linear regression software and the output summary tells us that R-square is 45/62, the linear model coefficient of the first explanatory unknown is 7 with standard error estimate 2.5, the coefficient for the second explanatory unknown is 11 with standard error 2, and the coefficient for the third explanatory unknown is 15 with standard error 4. The regression intercept is reported as 28. The sum of squares in regression (SSR) is reported as 90000 and the sum of squared errors (SSE) is 34000. From this information, what is the critical value needed to calculate the margin of error for a 95 percent confidence interval for one of the model coefficients? (a) 2.069 (b) 2.110 (c)…

- A regression and correlation analysis resulted in the following information regarding a dependent variable (y) and an independent variable (x): $\sum x = 90$, $\sum (y - \bar{y})(x - \bar{x}) = 466$, $\sum y = 170$, $\sum (x - \bar{x})^2 = 234$, $n = 10$, $\sum (y - \bar{y})^2 = 1434$, SSE = 505.98. The least squares estimate of the slope or b1 equals a. .923. b. 1.991. c. -1.991. d. -.923.

- The table below shows the numbers of new-vehicle sales for company 1 and company 2 for 11 years. Construct and interpret a 90% prediction interval for new vehicle sales for company 2 when the number of new vehicles sold by company 1 is 2687 thousand. The equation of the regression line is y = 1.207x + 398.378. "We can be 90% confident that when the new-vehicle sales for company 1 is 2687 thousand, the new-vehicle sales for company 2 will be between ___ and ___ thousand."

- 10) The following results are from a regression where the dependent variable is COST OF COLLEGE and the independent variables are TYPE OF SCHOOL, which is a dummy variable = 0 for public schools and = 1 for private schools; FIRST QUARTILE SAT, which is the average score of students in the top quartile of SATs; THIRD QUARTILE SAT, which is the average score of students in the 3rd quartile; and ROOM AND BOARD, which is the cost of room and board at the school. The first set of results includes all the independent variables whereas the second set of results excludes the THIRD QUARTILE SAT variable. a) Based on these two sets of data, does it appear that multicollinearity is a problem (specifically, does it appear that THIRD QUARTILE SAT is highly collinear with the other independent variables)? Explain. b) Calculate the VIF for THIRD QUARTILE SAT. c) Based on the VIF, do you think that multicollinearity is a problem? Explain.

- Curing times in days (x) and compressive strengths in MPa (y) were recorded for several concrete specimens. The means and standard deviations of the x and y values were $\bar{x} = 5$, $s_x = 2$, $\bar{y} = 1350$, $s_y = 100$. The correlation between curing time and compressive strength was computed to be r = 0.7. Find the equation of the least-squares line to predict compressive strength from curing time.

- A trucking company considered a multiple regression model for relating the dependent variable of total daily travel time for one of its drivers (hours) to the predictors distance traveled (miles) and the number of deliveries made. After taking a random sample, a multiple regression was performed and the output is given below. Interpret the slope of the deliveries variable. 1) When deliveries increases by 0.805 units, time increases by 1 hour, holding all other variables constant. 2) We do not have enough information to say. 3) When deliveries increases by 1 unit, time decreases by 0.805 hours, holding all other variables constant. 4) When deliveries decreases by 1 unit, time increases by 0.805 hours, holding all other variables constant. 5) When deliveries increases by 1 unit, time increases by 0.805 hours, holding all other variables constant.

Recommended textbooks for you

Elementary Linear Algebra (MindTap Course List)

Algebra

ISBN:9781305658004

Author:Ron Larson

Publisher:Cengage Learning

Big Ideas Math A Bridge To Success Algebra 1: Stu...

Algebra

ISBN:9781680331141

Author:HOUGHTON MIFFLIN HARCOURT

Publisher:Houghton Mifflin Harcourt

Linear Algebra: A Modern Introduction

Algebra

ISBN:9781285463247

Author:David Poole

Publisher:Cengage Learning

Glencoe Algebra 1, Student Edition, 9780079039897...

Algebra

ISBN:9780079039897

Author:Carter

Publisher:McGraw Hill