
Neural network regression of car-prices

We assume that the car prices (y) can be predicted as y = f(x), where x = [x0, ..., x9, ...] are the car features and f is a three-layer fully connected neural network. We first create the matrix X_train, whose columns are the normalized training features.

Create a three-layer neural network with hidden layers of h1 and h2 neurons, respectively. Use ReLU activations at the hidden layers and no activation on the output layer.

import numpy as np
import matplotlib.pyplot as plt
import torch
import torch.nn as nn

class Net(nn.Module):
    def __init__(self, Nfeatures, Noutput, Nh1=10, Nh2=10):
        super(Net, self).__init__()
        # YOUR CODE HERE

    def forward(self, x):
        # YOUR CODE HERE
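One possible way to fill in the template is sketched below. The layer names `fc1`, `fc2`, and `fc3` are illustrative choices rather than part of the exercise, and the shape check uses random data; the 16 input features are taken from the `Net(16, ...)` call that appears later in the exercise.

```python
import torch
import torch.nn as nn


class Net(nn.Module):
    def __init__(self, Nfeatures, Noutput, Nh1=10, Nh2=10):
        super().__init__()
        # Three fully connected layers: input -> h1 -> h2 -> output
        # (fc1/fc2/fc3 are illustrative names, not given by the exercise)
        self.fc1 = nn.Linear(Nfeatures, Nh1)
        self.fc2 = nn.Linear(Nh1, Nh2)
        self.fc3 = nn.Linear(Nh2, Noutput)

    def forward(self, x):
        x = torch.relu(self.fc1(x))  # ReLU on hidden layer 1
        x = torch.relu(self.fc2(x))  # ReLU on hidden layer 2
        return self.fc3(x)           # no activation on the output layer


# Shape check on random data: 8 samples, 16 features each
net = Net(16, 1, Nh1=20, Nh2=25)
out = net(torch.randn(8, 16))
n_params = sum(p.numel() for p in net.parameters())
print(out.shape, n_params)
```

Note that `nn.Linear` expects one sample per row, so the input has shape (number of samples, number of features).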

featureScale = np.max(np.abs(X_train),axis=0,keepdims=True)

X_train_T = X_train/featureScale

X_train_T = torch.tensor(X_train_T.astype(np.float32))

ymax = np.max(y_train,axis=0,keepdims=True)

# Cast after dividing: a float32 array divided by the float64 ymax is promoted back to float64
y_train_T = torch.tensor((y_train/ymax).astype(np.float32)).unsqueeze(1)
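The scaling step can be illustrated on a small example. The array `X_toy` below is made-up data, not the car dataset; the sketch only shows that dividing by the per-column maximum absolute value maps every feature into [-1, 1].

```python
import numpy as np

# Hypothetical toy data: 3 samples (rows), 2 features (columns)
X_toy = np.array([[2000.0, -1.0],
                  [1000.0,  4.0],
                  [ 500.0,  2.0]])

# Per-feature scale: largest absolute value in each column, shape (1, 2)
featureScale = np.max(np.abs(X_toy), axis=0, keepdims=True)

# Broadcasting divides every row by the same per-column scale
X_scaled = X_toy / featureScale

print(featureScale)
print(X_scaled)
```

Because `keepdims=True` keeps the scale as a (1, Nfeatures) row, the same `featureScale` can be reused to scale any other data with the same columns.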

Training

YOUR CODE BELOW

- Define a network with 20 neurons in hidden layer 1 and 25 in hidden layer 2

- Use SGD as the optimizer

- Define the loss as the norm of the error

- Train the network for 10,000 epochs with a learning rate of 1e-3

net = Net(16,1,20,25)

out = net(X_train_T)

# YOUR CODE HERE

for epoch in range(10000):
    # YOUR CODE HERE
    if epoch % 1000 == 0:
        print("Error =", error.detach().cpu().item())
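The training steps listed above could be filled in roughly as follows. This is a sketch under assumptions: `X_demo` and `y_demo` are random stand-ins for `X_train_T` and `y_train_T`, and an `nn.Sequential` model with the same 16 -> 20 -> 25 -> 1 layout stands in for `Net` so the snippet runs on its own.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical stand-ins for X_train_T / y_train_T (random float32 data)
X_demo = torch.rand(64, 16)
y_demo = X_demo.sum(dim=1, keepdim=True) / 16.0

# Same layout as the exercise: 16 -> 20 -> 25 -> 1, ReLU on the hidden layers
net = nn.Sequential(
    nn.Linear(16, 20), nn.ReLU(),
    nn.Linear(20, 25), nn.ReLU(),
    nn.Linear(25, 1),
)

optimizer = torch.optim.SGD(net.parameters(), lr=1e-3)

errors = []
for epoch in range(10000):
    optimizer.zero_grad()                    # clear gradients from the previous step
    out = net(X_demo)                        # forward pass
    error = torch.linalg.norm(out - y_demo)  # 2-norm of the residual
    error.backward()                         # backpropagate
    optimizer.step()                         # SGD update
    errors.append(error.item())
    if epoch % 1000 == 0:
        print("Error =", error.detach().cpu().item())
```

Recomputing `out` inside the loop means the variable holds the latest predictions when it is plotted afterwards.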

fig,ax = plt.subplots(1,1,figsize=(12,4))

ax.plot(y_train_T.abs().detach().cpu(),label='Actual Price')

ax.plot(out.abs().detach().cpu(),label='Prediction')

ax.legend()

plt.show()


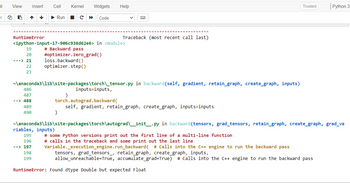

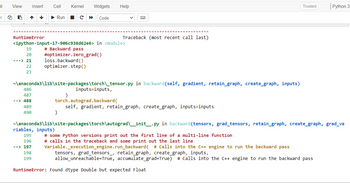

I am getting an error as below:

RuntimeError: Found dtype Double but expected Float
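A likely cause of this error, assuming it is raised when the loss is computed or backpropagated, is that the target tensor is float64 (Double) while the network output is float32 (Float). NumPy promotes a float32 array divided by a float64 array back to float64, so the cast has to happen after the division. The prices below are made-up values used only to show the dtypes.

```python
import numpy as np
import torch

# Hypothetical prices stored as float64, as NumPy does by default
y_train = np.array([13495.0, 16500.0, 9980.0])
ymax = np.max(y_train, axis=0, keepdims=True)  # float64 array of shape (1,)

# float32 array / float64 array is promoted to float64 -> Double tensor
y_bad = torch.tensor(y_train.astype(np.float32) / ymax).unsqueeze(1)

# Casting after the division keeps float32 -> Float tensor
y_good = torch.tensor((y_train / ymax).astype(np.float32)).unsqueeze(1)

# Alternative: convert an existing tensor with .float()
y_also_good = y_bad.float()

print(y_bad.dtype, y_good.dtype, y_also_good.dtype)
```

Either fix makes the target match the float32 parameters that `nn.Linear` uses by default.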


