Question

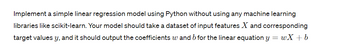

Transcribed Image Text: Implement a simple linear regression model using Python without using any machine learning libraries like scikit-learn. Your model should take a dataset of input features X and corresponding target values y, and it should output the coefficients w and b for the linear equation y = wX + b.
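One way to meet this requirement is ordinary least squares, which has a closed-form solution when there is a single input feature: $w = \sum_i (x_i - \bar{x})(y_i - \bar{y}) \,/\, \sum_i (x_i - \bar{x})^2$ and $b = \bar{y} - w\bar{x}$. The sketch below is a minimal, plain-Python version (no third-party libraries at all). It assumes X is a flat list of numbers (one feature), and the function names `fit_simple_linear_regression` and `predict` are hypothetical; this is an illustration, not the page's expert solution.

```python
def fit_simple_linear_regression(X, y):
    """Fit y = w*x + b by ordinary least squares (closed form, one feature).

    Assumes X and y are equal-length sequences of numbers. Returns (w, b).
    """
    n = len(X)
    if n != len(y):
        raise ValueError("X and y must have the same length")
    if n < 2:
        raise ValueError("need at least two data points")

    x_mean = sum(X) / n
    y_mean = sum(y) / n

    # Sum of cross-deviations and sum of squared x-deviations;
    # the slope is their ratio (any 1/n factors cancel).
    s_xy = sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(X, y))
    s_xx = sum((xi - x_mean) ** 2 for xi in X)
    if s_xx == 0:
        raise ValueError("all X values are identical; the slope is undefined")

    w = s_xy / s_xx
    # The least-squares line always passes through (x_mean, y_mean).
    b = y_mean - w * x_mean
    return w, b


def predict(X, w, b):
    """Apply the fitted line to each input value."""
    return [w * xi + b for xi in X]


if __name__ == "__main__":
    X = [1, 2, 3, 4, 5]
    y = [3, 5, 7, 9, 11]  # points lying exactly on y = 2x + 1
    w, b = fit_simple_linear_regression(X, y)
    print(f"w = {w}, b = {b}")
    print(predict([6, 7], w, b))
```

Running the script prints `w = 2.0, b = 1.0` and then `[13.0, 15.0]`, because the sample points lie exactly on $y = 2x + 1$. Gradient descent on the mean squared error would also work and extends naturally to many features, but for one feature the closed form gives the exact minimizer without choosing a learning rate or iteration count.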


Similar questions

- For a given mathematical model, which gives merely a representation of the real situation, there exists an optimum solution. (Yes/No, True/False)

- In R: which of the following functions in the broom library can generate a data frame of component-level information (e.g., coefficients, t-statistics) from a linear regression model? Select one: A. `glance()` B. `augment()` C. `round_df()` D. `tidy()`

- We use machine learning to solve problems if they satisfy three conditions. What are these conditions?

- In R, write a function that produces plots of statistical power versus sample size for simple linear regression. The function should be of the form `LinRegPower(N, B, A, sd, nrep)`, where `N` is a vector/list of sample sizes, `B` is the true slope, `A` is the true intercept, `sd` is the true standard deviation of the residuals, and `nrep` is the number of simulation replicates. The function should conduct simulations and then produce a plot of statistical power versus the sample sizes in `N` for the hypothesis test of whether the slope is different from zero. `B` and `A` can be vectors/lists of equal length. In this case, the plot should have separate lines for each pair of `A` and `B` values (`A[1]` with `B[1]`, `A[2]` with `B[2]`, etc.). The function should produce an informative error message if `A` and `B` are not the same length. It should also give an informative error message if `N` only has a single value. Demonstrate your function with some sample plots. Find some cases where power varies from close to zero to near…

- We all know that when the temperature of a metal increases, it begins to expand. So, we experimented with exposing a metal rod to different temperatures and recorded its length as follows. Temp: 20, 25, 30, 35, 40, 45, 50, 55, 60, 65. Length: 0.5, 1.8, 5, 6.2, 6.5, 7.8, 9.4, 9.8, 10.9. Required:
  1. Implement and plot a simple linear regression for the above data, where the temperature is "x" and the length is "y".
  2. Implement and plot a multiple linear regression ("polynomial regression") with different degrees. For example, degree 3: $Y = w_1 x + w_2 x^2 + w_3 x^3 + w_4$, where $w_4$ represents the bias. You can use the normal equation to calculate $W$: $W = (X^T X)^{-1} X^T Y$. Then calculate $Y = X \cdot W^T$.
  3. Try degrees 2, 3, 5, and 8.

- Write a program in Scilab that samples $f(x) = 3e^{-x}\sin x$ at $x = 0, 1, 2, 3, 4, 5$ and then, on the same graph, plots nearest-neighbor, linear, and spline interpolations of these data using the `interp1` function. The interpolation plots should have 200 samples uniformly spaced over $0 \le x \le 5$.

- Consider a plot of a model of the form $Y_i = B_0 + B_1 T_i + B_2 (X_{1i} - C) + e_i$. Which of the following is true? A. $B_2$ is the bump at the cutoff B. $B_2$ is the slope of the line C. $B_1$ is the slope of the line D. $B_0$ is the bump at the cutoff

- Logistic regression aims to train the parameters from the training set $D = \{(x^{(i)}, y^{(i)}),\ i = 1, 2, \dots, m,\ y \in \{0, 1\}\}$ so that the hypothesis function $h_\theta(x) = g(\theta^T x)$ (here $g(z) = \frac{1}{1 + e^{-z}}$ is the logistic or sigmoid function) can predict the probability of a new instance $x$ being labeled as 1. Please derive the following stochastic gradient ascent update rule for a logistic regression problem: $\theta_j := \theta_j + \alpha\,(y^{(i)} - h_\theta(x^{(i)}))\,x_j^{(i)}$.

- Assume the following simple regression model: $Y = \beta_0 + \beta_1 X + \epsilon$, $\epsilon \sim N(0, \sigma^2)$. Now run the following R code to generate values of $\sigma^2$ = `sig2`, $\beta_1$ = `beta1`, and $\beta_0$ = `beta0`. Simulate the parameters using the following code:

  ```r
  # Simulation
  set.seed("12345")
  beta0 <- rnorm(1, mean = 0, sd = 1)      ## The true beta0
  beta1 <- runif(n = 1, min = 1, max = 3)  ## The true beta1
  sig2 <- rchisq(n = 1, df = 25)           ## The true value of the error variance sigma^2

  ## Multiple simulation will require loops
  nsample <- 10  ## Sample size
  n.sim <- 100   ## The number of simulations
  sigX <- 0.2    ## The variance of X

  # Simulate the predictor variable
  X <- rnorm(nsample, mean = 0, sd = sqrt(sigX))
  ```

  Q1: Fix the sample size `nsample = 10`. Here, the values of X are fixed. You just need to generate $\epsilon$ and $Y$. Execute 100 simulations (i.e., `n.sim = 100`). For each simulation, estimate the regression coefficients ($\beta_0$, $\beta_1$) and the error variance ($\sigma^2$). Calculate the mean of…

- Prog & Numerical Analysis (MATLAB)

- In bivariate regression, the regression coefficient will be equal to $r_{XY}$ when:

- A machine learning model is learning parameters $w_1$ and $w_2$. It turns out that for the latest data inputted, the error function is $E = (w_1 - 1)^2 + (w_2 - 1)^2$. The current values of the parameters are $w_1 = 2$ and $w_2 = 0.9$. Gradient descent is applied to update the parameters. Which statement is true? (Figure: plot of the error function $E$ against $w_1$ and $w_2$.)
  - $w_1$ decreases and $w_2$ decreases; the change in $w_1$ is bigger.
  - $w_1$ decreases and $w_2$ increases; the change in $w_2$ is bigger.
  - $w_1$ decreases and $w_2$ increases; the change in $w_1$ is bigger.
  - $w_1$ decreases and $w_2$ increases, both by the same amount.
