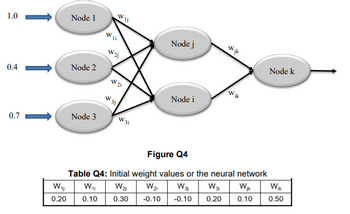

Figure Q4 shows a fully connected feed-forward neural network that is fed a single input instance, [1.0, 0.4, 0.7]. The initial weight values for the network are given in Table Q4.

(Figure Q4)

(Table Q4: Initial weight values for the neural network)

Calculate the output value for the given input. In your answer, use the Rectified Linear Unit (ReLU) as the activation function.
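The forward pass can be written out as below. This is a sketch under two assumptions: no bias terms are used (none appear in the figure or table), and ReLU is applied at the hidden nodes i and j as well as at the output node k. The symbols x_1, x_2, x_3 (the inputs 1.0, 0.4, 0.7), h_i, h_j and y_k are notation introduced here.

$$
\mathrm{ReLU}(z) = \max(0, z)
$$

$$
h_i = \mathrm{ReLU}\left(\sum_{n=1}^{3} x_n W_{ni}\right), \qquad
h_j = \mathrm{ReLU}\left(\sum_{n=1}^{3} x_n W_{nj}\right), \qquad
y_k = \mathrm{ReLU}\left(h_i W_{ik} + h_j W_{jk}\right)
$$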

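Transcribed image text:

Figure Q4: Input nodes 1, 2 and 3 receive 1.0, 0.4 and 0.7. Each input node n connects to hidden nodes i and j through weights Wni and Wnj, and hidden nodes i and j connect to output node k through weights Wik and Wjk.

Table Q4: Initial weight values for the neural network. The eight weights are W1i, W2i, W3i, W1j, W2j, W3j, Wik and Wjk. Their values appear in the transcription in this order: 0.20, 0.10, 0.30, -0.10, -0.10, 0.20, 0.10, 0.50. The transcription does not preserve which value belongs to which weight.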
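A minimal Python sketch of the same calculation follows. The function names `relu` and `forward` are illustrative. The sketch assumes no biases and ReLU at every non-input node. The weight assignment in the example is hypothetical: it takes the transcribed values in order as W1i, W1j, W2i, W2j, W3i, W3j, Wik, Wjk, which the transcription does not confirm. Replace `W_IN` and `W_OUT` with the actual Table Q4 values before relying on the result.

```python
def relu(z: float) -> float:
    """Rectified Linear Unit: returns max(0, z)."""
    return max(0.0, z)


def forward(x: list[float], w_in: dict[str, list[float]], w_out: dict[str, float]):
    """Forward pass for a 3-input, 2-hidden-node (i, j), 1-output (k) network.

    x:     the three inputs [x1, x2, x3]
    w_in:  hidden node name -> its incoming weights [W1n, W2n, W3n]
    w_out: hidden node name -> its weight into output node k (Wnk)
    Assumption: no bias terms; ReLU at hidden and output nodes.
    """
    hidden = {}
    for node, weights in w_in.items():
        # Weighted sum of the inputs arriving at this hidden node.
        net = sum(xn * wn for xn, wn in zip(x, weights))
        hidden[node] = relu(net)
    # Weighted sum of the hidden activations arriving at output node k.
    net_k = sum(hidden[node] * w_out[node] for node in hidden)
    return relu(net_k), hidden


x = [1.0, 0.4, 0.7]

# HYPOTHETICAL assignment: transcribed Table Q4 values taken in order as
# W1i, W1j, W2i, W2j, W3i, W3j, Wik, Wjk. Replace with the real mapping.
W_IN = {
    "i": [0.20, 0.30, -0.10],  # W1i, W2i, W3i
    "j": [0.10, -0.10, 0.20],  # W1j, W2j, W3j
}
W_OUT = {"i": 0.10, "j": 0.50}  # Wik, Wjk

output, hidden = forward(x, W_IN, W_OUT)
print(f"h_i = {hidden['i']:.3f}, h_j = {hidden['j']:.3f}, output = {output:.3f}")
```

Keeping the weights in dictionaries keyed by hidden node makes it easy to re-run the pass once the correct Table Q4 assignment is known.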